
Will AI ever be completely fair?

23 April, 2021 | Written by: Karine Faucher

Categorized: Perspectives


Amidst the celebrations and anticipation of the good that AI can bring, cases of bias in AI have rightly been called out. Bias in AI is cited as a main reason why, in Edelman’s 2021 Trust Barometer, trust in tech is at an all-time low.

As AI’s capabilities expand, can we assume that more AI will simply mean more bias? Or will solutions from researchers, governments and AI developers root out bias? Indeed, fairness in AI is expected to be addressed in the UK government’s AI strategy, due to be published later this year. The strategy, aimed at unleashing the transformational power of Artificial Intelligence, has “Ethical, safe and trustworthy development of responsible AI” as a major focus. An AI Roadmap submitted to the UK Government by the independent advisory body, the AI Council, states that “There is a fundamental need to consider transparency and data bias as public sector adoption increases.”

IBM recently brought together 35 leading journalists and influencers with four of AI’s foremost experts. In a pacey, succinct session, the speakers addressed burning questions about AI fairness under four headings. As moderator of the panel, I’ve captured the highlights of the discussion below so everyone can explore this important topic.

1. The various dimensions of AI fairness – How can bias in AI generate discrimination?

Fairness in AI has less to do with technology and more to do with the existing frameworks behind it. To address bias we need to go “beyond data, algorithms and models” to also look at the background of those who design and deploy AI, said Catelijne Muller, President of ALLAI, an organization advocating for responsible AI. “Bias comes from the general power dynamics in society” said Gry Hasselbalch, senior researcher and co-founder of DataEthics.eu. When AI is seen to discriminate, it’s often because AI models are adopted uncritically and given priority over human decision making. Gry emphasized that “we need to preserve our human critical agencies to always be able to challenge biased AI decisions.”

AI bias is something new to grapple with when it comes to human rights, said Catelijne, who has advised the Council of Europe (which she calls “the house of human rights”) on laws around AI. People have the right not to be discriminated against, but it goes further than that: people can only be arrested if there’s a reasonable suspicion of a crime. If predictive policing relies on correlations with other cases, a person might be arrested merely because they share features with those cases, without any reasonable suspicion.

2. How to keep human bias out of AI?

“When we start trying to clean bias out of systems, it’s like a game of whack-a-mole” said Virginia Dignum, Professor of Responsible Artificial Intelligence at Umeå University. She stressed that we cannot completely remove bias from AI systems just like we cannot completely remove bias from humans. Nonetheless, practices such as data calibration can be used for fairer AI results: data from less-represented and more-represented groups are combined, so that the system is trained in a calibrated and a balanced way.
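One simple way to picture this kind of balancing is to weight each training record so that every group contributes equally overall. The sketch below is only an illustration of that idea and not the method the speakers use: the function name `balancing_weights` and the group labels are hypothetical, and real systems would need far more care than this.

```python
from collections import Counter


def balancing_weights(groups):
    """Return one weight per record so each group carries equal total weight.

    `groups` is a list of group labels, one per training record.
    (Hypothetical helper, for illustration only.)
    """
    counts = Counter(groups)   # how many records each group has
    n_groups = len(counts)     # number of distinct groups
    total = len(groups)        # total number of records
    # A record from a small group gets a larger weight, and vice versa.
    return [total / (n_groups * counts[g]) for g in groups]


# Example: group "a" is over-represented (8 records), group "b" has only 2.
groups = ["a"] * 8 + ["b"] * 2
weights = balancing_weights(groups)

weight_a = sum(w for g, w in zip(groups, weights) if g == "a")
weight_b = sum(w for g, w in zip(groups, weights) if g == "b")
print(weight_a, weight_b)  # both groups now carry the same total weight
```

Weights like these could be passed to a training routine that accepts per-record weights. The sketch deliberately leaves open which groups to balance and how balanced is balanced enough.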

But the real questions are “How much risk do we want to take? And what is the threshold for acceptance?” said Virginia. “This is a broader societal question which should not only be left to algorithm developers.”

IBM Fellow and AI Ethics Global Leader, Francesca Rossi, also emphasized the education and training of developers – on tools and methodologies but also on their awareness of their own biases. Additionally, bias in the system should be transparently communicated. For example, IBM uses the concept of an AI factsheet that developers complete to help everyone understand what kind of bias is in the system. Explainability – allowing people to understand how decisions were made – is also practiced by IBM and can be useful to identify bias. But while technology will help, Francesca’s ultimate goal is “to advance people through technology, making sure they’re more aware of their values and they’re more conscious about their actions and decisions.”

3. Will the EU regulation framework on AI be a game-changer?

Note that this discussion was held before the EU’s April 21 publication of proposed AI rules.

“AI does not operate in a lawless world,” said Catelijne Muller. From labour laws to the GDPR, “there already exists a vast legal field that also applies to AI.” Moreover, the obligation to explain certain decisions exists whether or not AI was used. For example, a judge has to explain how a decision came about, and a government is legally obliged to explain why someone was refused social benefits.

Catelijne rejects the claim that AI regulation could hamper innovation: “First of all, if it hampers anything, it hampers harmful innovation. And secondly, regulation creates a level playing field where you know that your competitor is bound by the same rules as you.”

With the GDPR, the EU has shown it is a trendsetter in the regulatory space. If the EU sets the right boundaries with the AI regulation, the rest of the world will need to live up to them if it wants to reach 500 million Europeans.

Whatever is proposed will be negotiated in the context of the power and interests of the different EU institutions, the EU member states, and their priorities and alliances, according to Gry Hasselbalch. Virginia Dignum added that European policies around technology should focus on the impact of digital technology in general, irrespective of whether we call that technology AI or not.

4. Where do we stand from a research perspective?

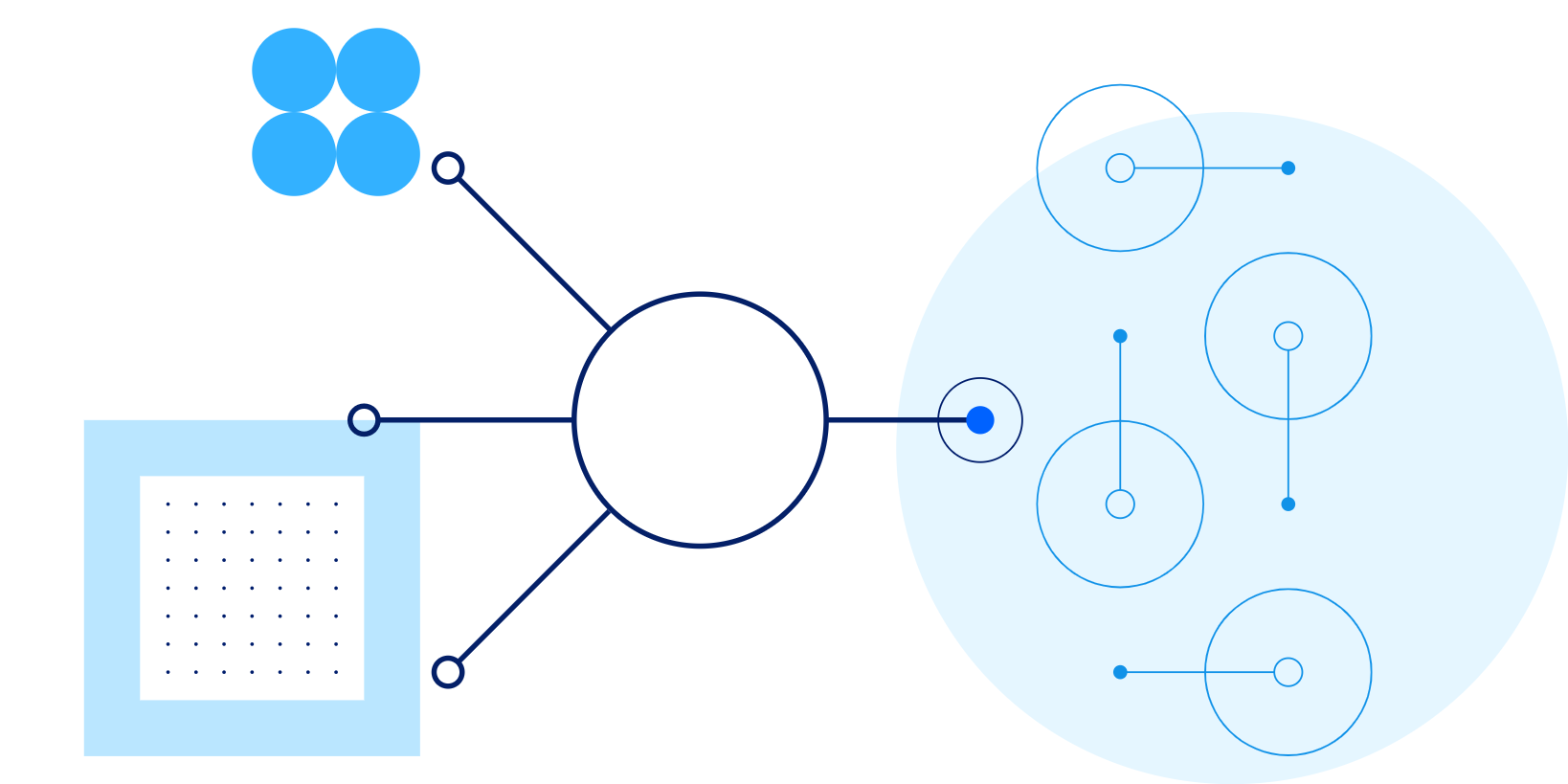

Explainability, transparency, and verification of AI systems are the focus of research for Virginia Dignum. This involves creating boundaries for AI systems and then verifying that the systems can be trusted to stay within those boundaries. It also involves building modular AI systems and examining how the composed model behaves in terms of fairness, bias, and so on, in comparison to each of its modular parts. This research is crucial since it is probable that, in the future, there will be a market of AI components that can be used across Europe by many different people.

Francesca Rossi and her team are focused on three key areas of research. The first is how to visualize bias in AI models that are analyzed by third parties. The second is targeted at collective decision-making: how do we achieve a reasonable trade-off between properties like fairness, privacy, and social welfare? The third is how to leverage cognitive theories of human reasoning and decision making to advance AI’s capabilities to make decisions and to support human reasoning.

The four speakers were all part of the EU’s High Level Expert Group on AI, which worked from 2018 to 2020 to advise the European Commission on its AI Strategy by defining the Ethics Guidelines for Trustworthy AI.


Influencer Relations Leader, IBM Europe
