Live Blogging from the Cognitive Colloquium

October 13, 2015 | Written by: Steve Hamm

Categorized: Big Data | Cloud Computing | Cognitive Computing | Data Analytics | IBM Research | IBM Watson

7:00 PM ET

Embodied Cognition

Session Chair: Dr. Guru Banavar, Vice President, Cognitive Computing Research, IBM Research

Dr. Myron (Ron) Diftler, Robonaut Project Leader, NASA Johnson Space Center

Dr. Gaurav Sukhatme, Co-Director, Robotics Research Lab, University of Southern California

Paul Hermes, Entrepreneur in Residence, Medtronic

Grady Booch, Chief Scientist for Software Engineering, IBM Research

Some nuggets:

Paul Hermes

We’re testing robots as surgeons’ helpers. As robots get cheaper and better, we’ll get them into operating rooms. Right now we’re using robots for the most highly skilled tasks. Over time, we’ll use them elsewhere in the operating room on more routine tasks.

Gaurav Sukhatme

We’ve been working on underwater robots. There are three physical frontiers for robots: space, the inside of the human body and the deep ocean. These are difficult places for humans to reach and operate in.

A key capability is being able to navigate from A to B. Underwater, if you don’t take active measures, your robot will be gone. You have to have predictive capability about where the ocean will take your robot. The explosion of data and new models has made it possible for us to put vehicles underwater for weeks on end and have them navigate themselves.
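Sukhatme's point about predicting where the ocean will take the robot can be made concrete with a small vector calculation. The sketch below is a simplified illustration, not the USC team's navigation method: it assumes a flat local x/y frame in meters, a constant current vector supplied by some ocean model, and a fixed vehicle speed through the water. The hypothetical function `heading_to_target` picks a water-relative velocity that cancels the cross-track part of the current and spends the rest of the speed budget heading toward B.

```python
import math


def heading_to_target(pos, target, current, speed):
    """Return (water_velocity, ground_speed), or None if B cannot be reached.

    pos, target: (x, y) positions in meters in a flat local frame (assumed).
    current: predicted (x, y) current in m/s, e.g. from an ocean model (assumed).
    speed: the vehicle's speed through the water in m/s.
    """
    dx, dy = target[0] - pos[0], target[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist == 0:
        return None  # already at the target; nothing to steer toward
    ux, uy = dx / dist, dy / dist  # unit vector along the desired track

    # Split the current into a component along the track and one across it.
    along = current[0] * ux + current[1] * uy
    cross_x, cross_y = current[0] - along * ux, current[1] - along * uy
    cross = math.hypot(cross_x, cross_y)
    if cross > speed:
        return None  # the vehicle cannot cancel the sideways drift

    # Cancel the cross-track drift, then use the remaining speed along the track.
    thrust_along = math.sqrt(speed ** 2 - cross ** 2)
    ground_speed = thrust_along + along
    if ground_speed <= 0:
        return None  # a head-on current is stronger than the vehicle
    water_velocity = (-cross_x + thrust_along * ux, -cross_y + thrust_along * uy)
    return water_velocity, ground_speed
```

For a 0.5 m/s vehicle heading due east into a 0.3 m/s northward current, `heading_to_target((0, 0), (100, 0), (0, 0.3), 0.5)` aims partly south (a water velocity of roughly (0.4, -0.3)) and makes about 0.4 m/s over the ground. A real vehicle would re-run a calculation like this as its current forecast and position estimate change.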

Another challenge is the fact that it’s difficult to communicate underwater, so you have to squeeze your communications down to a few bits.
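One common way to squeeze a message down to a few bits is to quantize it against context that both ends already share. The sketch below assumes, hypothetically, that the vehicle and the surface station agree on a rectangular operating box; a position report then fits in 16 bits (8 per axis) at the cost of a bounded rounding error. The function names and the box are illustrative, not from the talk.

```python
def encode_position(x, y, box, bits=8):
    """Pack (x, y) inside box = (xmin, ymin, xmax, ymax) into 2 * bits bits."""
    xmin, ymin, xmax, ymax = box
    levels = (1 << bits) - 1  # largest code per axis, e.g. 255 for 8 bits

    def quantize(value, lo, hi):
        q = round((value - lo) / (hi - lo) * levels)
        return min(max(q, 0), levels)  # clamp readings that fall outside the box

    # x goes in the high bits, y in the low bits.
    return (quantize(x, xmin, xmax) << bits) | quantize(y, ymin, ymax)


def decode_position(code, box, bits=8):
    """Invert encode_position, up to half a quantization step per axis."""
    xmin, ymin, xmax, ymax = box
    levels = (1 << bits) - 1
    qx, qy = code >> bits, code & levels
    return (xmin + qx / levels * (xmax - xmin),
            ymin + qy / levels * (ymax - ymin))
```

In a 1 km by 1 km box, 8 bits per axis gives a step of 1000/255, or about 3.9 m, so each decoded coordinate lands within about 2 m of the original. Spending more bits buys precision; shrinking the agreed box buys it without extra bits.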

Also, to get a position fix, the robot has to come to the surface. That takes time and energy. So you have to deal with these specific challenges.

Guru Banavar

Where do you see the high-value economic and social applications?

Grady Booch

Every industry has some degree of robotics for which there’s an opportunity—farming, retailing, healthcare. The more difficult and interesting situation is systems that combine robotics with cognitive or thinking abilities. You want to be able to converse with the robot. You want it to interact with you. Eldercare is one important scenario for these kinds of socially aware robots.

Guru Banavar

Panelists, do the robots you’re developing use sophisticated cognitive capabilities today?

Ron Diftler

We don’t have cognitive capabilities in Robonaut 2. We focus more on capabilities and supervisory control than autonomy. We want to go to autonomy and cognition, but we’re not there yet. When we’re doing complicated tasks like maintenance, we want the robot to work on its own rather than waiting for instructions from Earth for every step.

Gaurav Sukhatme

Our underwater vehicles are operating alone for weeks at a time—making decisions on their own. We’re working on a project where marine biologists specify what microbes they want to look for. We fed the robot information about where those microbes would most likely be found. It went down and found the microbes.

Paul Hermes

Very few surgical robots have cognitive ability. We need to think of them as one element in the system, the operating room. And we need to ask what cognitive abilities we can add to the operating room. If we can do things to improve the efficiency of the environment around the robot, we can make healthcare more affordable and make it more accessible to more people.

Guru Banavar

People tend to focus on humanoid robots. Why is that?

Grady Booch

We’re at the next generation of human-machine interface. We want to interact with things that are like us. The humanoid is the ultimate computer interface.

Ron Diftler

I believe that robots will be able to read body language and emotional states.

Grady Booch

We’re going to need robot psychologists, too.

———

6:30 PM ET

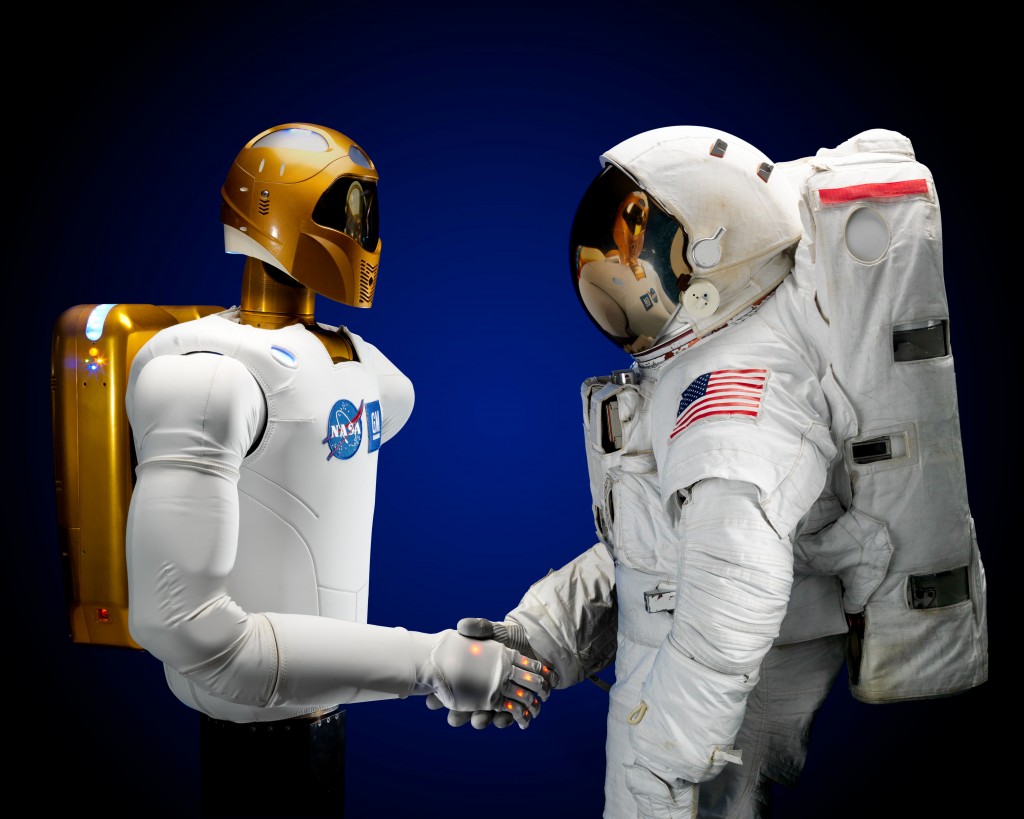

Robonaut 2 – Working with Humans on Earth and in Space

Dr. Myron (Ron) Diftler, Robonaut Project Leader, NASA Johnson Space Center

I’ve been working on this for 18 years, so I have a lot of history behind it.

The motivation—creating an assistant for astronauts. It’s for the dull, dangerous and dirty tasks, so humans don’t have to do them.

Robonaut isn’t here to replace astronauts. It’s to augment them.

We started the program in 1998. We were testing robots in the Arizona desert. GM came to us. They wanted to help factory workers with challenging tasks. They liked Robonaut I. We started working with them in 2007 on Robonaut 2 development. It’s a robot from the waist up.

It has very fluid motions. The and it very imprint. It can grasp a pen and tools. It has four degrees of freedom. It’s capable of a wide variety of grasps.

Robonaut 2 has to be able to do real work. It can lift more than 20 pounds.

We have force control, so it doesn’t exert unacceptable force. An astronaut can easily restrain its movements.

We operate it either by controlling it remotely from a computer, or by using teleoperation equipment—where the human acts out the motions we want the robot to make.

They’re capable of nonverbal communication. If a human reaches out for something the robot is holding, the robot will extend it toward the human and let go at the right moment.

In 2011 we launched the system into space. They unpacked the robot on the space station—the first humanoid robot in space. The first thing it said was “hello world” in American Sign Language. It shook hands with astronauts. We trained it to press buttons and flip switches, and to use tools. It used baby wipes to clean handrails. It caught a spinning roll of duct tape.

Next, we want to teach the robot to move and work outside the space station. We now have a robot that has legs.

We have a spinoff called RoboGlove. It’s a glove that a person uses to increase their grasping strength.

—————

5:15 PM ET

Building and Evaluating Cognitive Systems

Session Chair: Rob High, IBM Fellow, Vice President, CTO Watson

Dr. Jaime Carbonell, Allen Newell Professor of Computer Science, Carnegie Mellon University

Dr. John Laird, The John L. Tishman Professor of Engineering, University of Michigan

Dr. Jerome Pesenti, Vice President, Core Technology, IBM Watson

Rob High

What demands do these AI technologies place on the underlying technology?

Jaime Carbonell

There’s lots of data but little knowledge associated with it, and few labels. Also a lot of the data is unstructured, there are lots of different types of data, and some of the data has a lot of noise. It’s not that reliable.

John Laird

I work on cognitive architecture. My goal is general intelligence. I want to be inspired by humans. There’s a control issue: how do you make a system like this run and behave coherently? As the world changes we want our system to change and adapt, too.

We need associational memory systems.

Also, we want our system to do online, real-time learning—to learn from its own experience. You want to make that happen in the background so it doesn’t interfere with getting its tasks done.

You want the system to be able to make decisions in 50 milliseconds, which humans can do.

Rob High

We think of systems as the mechanics of the software and hardware. But there are also people and processes in systems. What are the challenges there?

John Laird

It has to be responsive. People won’t wait a long time. You have to establish trust. Humans have to be able to count on the system behaving in a certain way. And, finally, while it’s doing its thing, it’s important that you can stop it and ask it why it’s doing something. If you’re driving your car and it does something well, it would be nice if you could ask it why it did something—and it would explain.

Jerome Pesenti

We take technologies that work well on paper and deploy them in the real world. That’s the big challenge we face. We give systems to the customer. We ask for training data. And the customers often don’t really understand what the training data should be, or they don’t collect the data. So we have to work through that. Also, people are incentivized to overestimate the quality of the system. So you have to go into this with a scientific method so you’re asking the hard questions and getting the right data.

John Laird

You have to train your experts to give you the whole story—to not oversimplify what they do and what they know.

Rob High

How do you measure success?

Jaime Carbonell

The answer is get more data and train more, and do iterations back to the experts to get their feedback.

Measuring is difficult. In machine translation there isn’t necessarily a “correct” translation. That’s why you need to get samples from a number of experts—crowdsourcing. That’s the closest you’ll get to ground truth.
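Carbonell's point that a translation has no single correct answer is why automatic metrics such as BLEU score a candidate against several human reference translations at once. The sketch below implements only BLEU's clipped unigram precision (no higher-order n-grams, no brevity penalty) to show why extra references help; it is not a description of CMU's evaluation setup, and the example sentences are made up.

```python
from collections import Counter


def clipped_unigram_precision(candidate, references):
    """Fraction of candidate words supported by the references.

    Each word's count is clipped at the largest number of times it appears
    in any single reference, so repeating a word cannot inflate the score.
    """
    cand_counts = Counter(candidate.split())
    max_ref_counts = Counter()
    for ref in references:
        for word, n in Counter(ref.split()).items():
            max_ref_counts[word] = max(max_ref_counts[word], n)
    clipped = sum(min(n, max_ref_counts[w]) for w, n in cand_counts.items())
    return clipped / max(sum(cand_counts.values()), 1)
```

With the candidate "the cat sat on the mat", the two references "the cat is on the mat" and "there is a cat on the mat" together give a score of 5/6. The second reference alone gives 4/6, because it uses "the" only once and so clips the candidate's second "the". More references cover more of the acceptable ways to say the same thing.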

Jerome Pesenti

You measure the value of the system to the people who use it. We tell people that Watson is learning, so they understand that it will get better with time.

Rob High

What would you love to build in a cognitive system?

Jerome Pesenti

I’d like to build a system with very weakly supervised learning—like the way young children learn from their parents. It’s weakly labeled data.

Jaime Carbonell

There’s one fundamental way that computers are different from humans. They can be cloned. If you train one to do something very well, you can make many copies. For instance, you can train a machine to do neurosurgery, but then you can make a million machines to do the surgery. It’s much more efficient. I want to build systems that are the best in the world at certain valuable tasks. Then you make a bunch of copies of the system.

John Laird

I want to understand artificial intelligence and human intelligence—using all the forms of intelligence and reasoning. And I want my system to be able to learn while it’s thinking. I want a task that requires all of these things. We call it interactive task learning. We teach a system a new task from scratch. We’re working on this project. It’s going to be a robot, real time, with all the processing on the robot. It’s going to learn while it does the task. You give it a task and a goal. It learns and tries, but it can also ask for help. Over time, it doesn’t have to ask for help anymore.

In the future, when you get a new robot from the store, it knows a lot because it has basic training, but it learns more by talking to you and interacting with you and the world. You don’t reprogram it. You talk to it and it learns on its own.

Jerome Pesenti

We train systems initially on general knowledge—with language and with Wikipedia and the like. Then when it’s shipped to a customer it has to be trained more, and then it learns.

John Laird

We’re a long way from being able to give computers common sense knowledge—the knowledge that isn’t in Wikipedia.

Jaime Carbonell

One of the challenges is knowing what you don’t know. So a system has to be able to ask questions. Once you give one robot common sense knowledge, though, you can give that knowledge to all of them.

John Laird

There are a lot of hard problems in AI. Every 10 years something comes along that advances our understanding. Deep learning is one. But there are a lot of issues that have to be resolved. Put it this way: I don’t worry about job security.

———-

4:45 PM ET

Proactive Learning and Structural Transfer Learning – Building Blocks of Cognitive Systems

Dr. Jaime Carbonell, Allen Newell Professor of Computer Science, Carnegie Mellon University

Cognitive computing, or AI ++, as we discussed calling it this morning, is front and center at CMU. I’m going to focus on machine learning in my talk.

We’ve seen a lot of discussion of deep learning and deep neural networks—for vision, speech and natural language processing.

Also you have reinforcement learning—in robotics. You have large-margin methods for classification and graphical models for domain knowledge and strong priors.

But how do you cope with label sparsity? This is a challenge. Very little of the data in the world is labeled.

I’ll focus on some techniques that address that challenge.

One is Active Learning. You combine training data, a function space, a fitness criterion and sampling strategies.
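Active learning's ingredients show up in its smallest textbook case: learning a threshold on a line. In the sketch below (a toy illustration assuming a noise-free oracle and labels that switch from negative to positive exactly once), the training data is a pool of unlabeled points, the function space is the set of possible thresholds, the fitness criterion is consistency with every label received so far, and the sampling strategy queries the point in the middle of the still-uncertain region. The function name `learn_threshold` is hypothetical.

```python
def learn_threshold(pool, oracle):
    """Active learning of a 1-D threshold with a noise-free oracle (toy example).

    pool: unlabeled points; oracle(x) returns True for points at or above
    the unknown threshold and False below it. Returns the smallest positive
    point (or None if every point is negative) and the number of queries.
    """
    points = sorted(pool)
    lo, hi = 0, len(points)  # the first positive point has an index in [lo, hi]
    queries = 0
    while lo < hi:
        # The most uncertain point: it splits the remaining thresholds in half.
        mid = (lo + hi) // 2
        queries += 1
        if oracle(points[mid]):
            hi = mid  # threshold is at or before mid
        else:
            lo = mid + 1  # threshold is after mid
    boundary = points[lo] if lo < len(points) else None
    return boundary, queries
```

On a pool of 1,000 points, this asks the oracle for at most 10 labels, where labeling at random would typically need far more to pin the boundary down. With noisy or multiple oracles the picture changes, which is where Proactive Learning comes in.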

Active Learning assumes you have an oracle who can give you the right answer—one oracle and one right answer. But that’s not the way the world works. You need a crowd of experts as teachers. You need a crowd to train the system.

Proactive Learning uses multiple sources of information and assesses their expertise.

What if your oracles or experts change over time? They gain more expertise, or they don’t keep up. You want to be able to track that change. We monitor multiple oracles and identify the ones that diverge from the consensus.
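A simple way to track oracles whose reliability drifts is to compare each one against the majority label and keep only a recent window of outcomes. The class below is a hypothetical sketch of that idea, not the CMU Proactive Learning algorithm, which also models each oracle's expertise and cost; the window size and the 0.6 agreement cutoff are arbitrary assumptions.

```python
from collections import Counter, defaultdict, deque


class OracleMonitor:
    """Track how often each oracle agrees with the majority over a sliding window."""

    def __init__(self, window=50, min_agreement=0.6):
        # Only the most recent `window` outcomes count, so drift shows up quickly.
        self.history = defaultdict(lambda: deque(maxlen=window))
        self.min_agreement = min_agreement

    def record(self, labels):
        """labels: dict mapping oracle name -> label for one item; returns the consensus."""
        # Majority vote; on a tie, Counter.most_common keeps the label seen first.
        consensus, _ = Counter(labels.values()).most_common(1)[0]
        for name, label in labels.items():
            self.history[name].append(label == consensus)
        return consensus

    def agreement(self, name):
        """Fraction of recent items on which `name` matched the consensus."""
        recent = self.history.get(name)
        return sum(recent) / len(recent) if recent else None

    def divergent(self):
        """Oracles whose recent agreement rate has fallen below the cutoff."""
        return [name for name in self.history
                if self.agreement(name) < self.min_agreement]
```

Because the window forgets old items, an oracle that gains expertise climbs back above the cutoff, and one that stops keeping up falls below it and is flagged by `divergent()`.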

We have applied Proactive Learning to early detection of malware. Is something likely to be malware—something the system hasn’t seen before—based on the similarity of its behavior to things that have already been identified as malware?

We also have applied active learning to machine translation. It turns out that you get better language translations from humans if you tap two moderately good translators than if you rely on one better translator. We learn from this.

The second technique we use for coping with insufficient data is Transfer Learning. The basic idea is that you map invariant properties from similar tasks that the machine performed and learned about previously.

These techniques work. We’re applying Transfer Learning to referral networks. The sources of information could be Watson modules, or human experts or crowd sourcing. Proactive Learning is working. The two augment each other.

———-

2:45 PM ET

Applications of Machine Learning

Session Chair: Dr. Michael Karasick, VP Innovations, IBM Watson

Dr. Yoshua Bengio, Professor, Department of Computer Science & Operations Research, Université de Montréal

Dr. Fei-Fei Li, Associate Professor, Computer Science Department, Stanford University

Dr. John Smith, Senior Manager of Intelligent Information Systems, IBM Research

Some nuggets:

Michael Karasick

What won’t we be able to do with deep learning?

Fei-Fei Li

Different people have different definitions of deep learning, some very narrow. The quest toward AI, especially in my area of computer vision, is advancing from perception to cognition to reasoning. We’re just starting. We’re good at perception. But there are a slew of cognitive tasks that we don’t have our hands on yet. The solutions are still on the horizon.

Yoshua Bengio

There are challenges, but we don’t know what’s impossible. Brains do a pretty good job at some of these tasks. So there are methods. If we can learn them, we can use them on machines.

John Smith

The recent advances taught us that there were classes of problems that were very difficult but are now solved. Labeled Faces in the Wild? Solved. There’s a lot more out there. In vision, we know little about how to teach a computer what the world looks like. But we have a focus on these things, and we can scale them to a larger set of challenges.

Michael Karasick

What about the notion of why? As opposed to “what” these things do.

Yoshua Bengio

Sometimes humans are able to explain their choices, and sometimes they can’t. We trust them because often they make the right decisions. The “why” is hidden in complex situations. Ultimately we can’t have a completely clear picture of why a person or a machine answers in a certain way. At some point the machines will be able to talk back in English about what’s going on inside.

Fei-Fei Li

We’re working on videos, using attention models. We’re trying to understand the why question or at least make the model interpretable.

John Smith

Systems can learn through deep learning, but it’s difficult for humans to understand how they’re reasoning. We have to think about how we’re going to design the systems so they not only come to conclusions but also present information in ways that are understandable to humans.

Michael Karasick

The notion of what’s an application is a function of who you are. Say you’re in an industrial domain—banks, insurance companies, governments, healthcare organizations. They have deep domain knowledge. We use it to train systems like Watson.

Fei-Fei Li

Knowledge is made of many layers. Deep learning is just one set of techniques.

Michael Karasick

Are scientists in this field taking on enough grand challenges?

Yoshua Bengio

We spend too much time going after the low-hanging fruit. The big ideas that take more thinking and time pay the price for that. As a community we’re way too focused on short-term questions and not enough on really understanding.

Fei-Fei Li

We have to really push science to the next levels.

I’m thinking now about the next set of challenges. There are some coming up. In one, they’re doing an 8th-grade science exam. In my own lab, we’re going after deeper knowledge structures in images, focusing on relationships and attributes rather than just on categorization.

I also hope people will look at videos—Internet videos and robotic videos.

John Smith

Video is still ripe. We need more progress. We need to do captioning of video, and question answering on video. We need to combine the language with what we see to give a deeper understanding of the world. That’s the point: How can we have a much deeper understanding of the world—what’s going on in our daily lives?

Fei-Fei Li

The evolution of the brain is not a pristine thing. Nature just patches things together. So the brain and the development of computer intelligence both emerge dynamically.

Yoshua Bengio

In neural networks, the theory tends to come after the discoveries. We make discoveries and then we ask why.

Intuition is crucial in this. Mathematical analysis is difficult to predict.

———–

2:15 PM ET

Large Scale Machine Learning

Dr. Yoshua Bengio, Professor, Department of Computer Science & Operations Research, Université de Montréal

Deep learning is an approach to machine learning. It’s inspired by the brain. We look at mathematical principles that could explain learning by the brain. These networks learn levels of representation and abstraction.

About 10 years ago there was a breakthrough in using deep networks. You’re able to have more abstract concepts, which allows the machines to generalize better.

Deep learning has revolutionized speech recognition and object recognition. Now it’s affecting computer vision, natural language processing, dialogue, reinforcement learning and robotics, etc.

Neural nets are now going beyond their traditional realm of perception.

There are challenges to scaling towards artificial intelligence.

One is computational. The bigger the models we’re able to train, the better they are. A second is understanding language using a form of reasoning. We’re using recurrent networks, drawing on different pieces of evidence and different kinds of reasoning. The other main challenge is unsupervised learning—large-scale unsupervised learning. We need a lot of data, and most of it won’t be labeled.

Computation is a key factor. With current algorithms, if we can make our neural nets 100 times faster and bigger, we can make a great amount of progress.

The current technology is between a bee and a frog, in terms of synapses in their brains. So we’re making progress.

The language understanding challenge. There has been a lot of progress in the past few years. I was part of the beginning of extending neural networks to natural language. We’re teaching the system word semantics. The systems capture analogies even though they were not programmed to do so. Take the vector for queen and subtract the vector for king, and the result is analogous to the difference between woman and man. The machine discovers the attributes. It understands gender.
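The analogy Bengio describes is plain vector arithmetic once words are embedded as vectors: queen - king is close to woman - man, or equivalently king - man + woman lands near queen. The sketch below uses tiny hand-picked 3-D vectors purely as an illustrative assumption. In a learned model such as word2vec, the vectors have hundreds of dimensions and come from text, and nobody hand-codes a "gender" direction, which is the point Bengio is making.

```python
import math

# Hypothetical toy embeddings. The dimensions loosely mean
# (royalty, masculine vs. feminine, food); learned embeddings have no
# such hand-assigned meaning.
EMBEDDINGS = {
    "king": (0.95, 0.9, 0.1),
    "queen": (0.95, -0.9, 0.1),
    "man": (0.1, 0.9, 0.2),
    "woman": (0.1, -0.9, 0.2),
    "apple": (0.0, 0.0, 0.9),
}


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    return sum(a * b for a, b in zip(u, v)) / (norm(u) * norm(v))


def analogy(a, b, c, vectors=EMBEDDINGS):
    """Solve "a is to b as c is to ?" by finding the word nearest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [w for w in vectors if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, vectors[w]))
```

Here `analogy("man", "king", "woman")` returns "queen": subtracting man from king removes the masculine component, adding woman supplies the feminine one, and the royalty component carries through unchanged.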

The next step is achieving semantic representations for word sequences.

We’re using end-to-end machine translation with recurrent nets and attention mechanisms. It’s a new way of doing machine translation.
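At its core, an attention mechanism lets the decoder weight every encoder state by how relevant it is to the word being produced, then work from the weighted average. The sketch below uses dot-product scores for brevity. The attention model from Bengio's group (Bahdanau, Cho and Bengio) scored relevance with a small learned feed-forward network instead, so treat this as a simplified illustration rather than that model.

```python
import math


def attend(query, keys, values):
    """Dot-product attention for one query over a sequence of encoder states.

    keys[i] is compared with the query to score position i; values[i] is what
    position i contributes to the output. Returns (weights, context_vector).
    """
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the max for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]  # softmax: positive and summing to 1
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, context
```

With a query of `(1.0, 0.0)` and encoder states `[(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0)]` used as both keys and values, the weights favor the first position, then the second, then the opposite-facing third. In a trained translator, the query, keys and values come from the recurrent network's hidden states, and the weights show which source words the model is "looking at" for each output word.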

Now we’re using similar techniques for translating from images to English. We can use this technique to generate captions for the images. You learn from the mistakes, too. It helps you understand what’s going on inside the machine.

We’re using this work for knowledge extraction. The machine reads Wikipedia and retains the knowledge so it can answer questions of fact.

There have been recent advances in unsupervised learning, using data that hasn’t been tagged and labeled by humans.

——–

1:00 PM ET

Brain Inspired Computing

Session Chair: Dr. Jeff Welser, Vice President and Lab Director, IBM Research – Almaden

Dr. Terrence Sejnowski, Francis Crick Chair, The Salk Institute for Biological Studies

Dr. Horst Simon, Deputy Director, Lawrence Berkeley National Laboratory

Dr. Dharmendra Modha, Chief Scientist of Brain-inspired Computing, IBM Research

Some nuggets:

Terry Sejnowski

The big change in neuroscience is we can read your mind. We can see into your brain.

Horst Simon

What do we have to do to scale computers to make them 1000 times more powerful in a decade? Computers are requiring more and more power. We have to look at alternate solutions. We look at the brain for inspiration. It’s a 20-watt computer. Across the computer industry, everything we do today is still von Neumann architecture. It has a fundamental flaw. Data has to travel too far and too often. It takes too much time and energy. We should be inspired by the brain as we design new architectures.

Terry Sejnowski

A big breakthrough will come when we figure out how to compute using spikes.
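Computing with spikes means neurons communicate through the timing of discrete events rather than continuous values. A standard minimal model is the leaky integrate-and-fire neuron, sketched below with arbitrary, assumed parameters (time constant of 10 steps, threshold of 1.0): the membrane potential leaks toward zero, integrates its input, and emits a spike and resets when it crosses the threshold. This is a textbook model, not a description of the neuron circuit on TrueNorth or any other particular chip.

```python
def lif_spike_times(inputs, tau=10.0, threshold=1.0, dt=1.0):
    """Simulate one leaky integrate-and-fire neuron; return the steps at which it spikes.

    inputs: input current at each time step (arbitrary units).
    tau: membrane time constant in steps; larger values leak more slowly.
    """
    v = 0.0  # membrane potential, starting at rest
    spikes = []
    for t, current in enumerate(inputs):
        v += dt * (-v / tau + current)  # Euler step: leak toward 0, integrate input
        if v >= threshold:
            spikes.append(t)  # the output is only the spike time...
            v = 0.0  # ...followed by a reset to rest
    return spikes
```

A steady input of 0.2 produces a regular spike every 7 steps, while an input of 0.05 never spikes, because the leak balances it at a potential of 0.5, below threshold. The information is in when, and how often, the neuron fires.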

Dharmendra Modha

Our TrueNorth chip is a supercomputer the size of a postage stamp running on a hearing aid battery. Now we need to get people to use it and create applications. We distributed the technology to dozens of researchers in universities and laboratories.

Horst Simon

All the scientific instruments we’re using are generating data at an unprecedented rate. They live on the Moore’s Law curve. We need to understand how we can optimally use the data. Humans can’t look at all of the data. There’s a great opportunity to use this kind of technology and scale it up. I can imagine the TrueNorth chip being put into supercomputers and focused on large data analysis.

Terry Sejnowski

The US BRAIN Initiative brings together scientists from different disciplines—such as nanoscientists and neuroscientists. The neuroscientists know what the questions are, and the nanoscientists know how to solve problems. So it’s essential for them to work together.

Horst Simon

We should bring a large group together to see how these new technologies can be applied to the high performance computing challenges faced by the US national labs.

Dharmendra Modha

Nothing we achieved in the SyNAPSE project could have been achieved without collaborations between us, universities and the national labs.

Horst Simon

When I was in junior high school, I got a kit to build a simple computer. I could play Tic Tac Toe. I beat the computer. I asked myself, “Is the computer upset I beat it?” The answer was no. It’s just a bunch of wires and lights. But this was the beginning of my desire to become a scientist. Everything we discussed today is nothing but wires and lightbulbs, really. I don’t think anybody here thinks Watson will get upset if it loses.

Terry Sejnowski

Humans love. They hate. They kill. The real turning point will come when we use computers to understand ourselves well enough to be able to start to address some of these behaviors we have, the hate and violence. That will happen in our lifetimes. Understanding ourselves will be the ultimate breakthrough.

Don’t trust consciousness. It’s very unreliable. There’s no reason why nature had to build a brain that understood how it worked. Consciousness is overhyped.

———

12:30 PM ET

Cognitive Computing – Past and Present

Dr. Terrence Sejnowski, Francis Crick Chair, The Salk Institute for Biological Studies

Making predictions is very difficult, especially about the future.

I’ll take you on a trip through AI history.

Let me take you back to 1987. I was asked to give a lecture at MIT. It was the era of AI—rules-based systems. I walked into a big room. The entire AI lab was there—faculty and students. They were hostile to my thinking. I said here’s a honeybee, with 100 neurons. We humans have a billion. Yet I pointed out that a bee can see and has other advanced sensing capabilities. They’re specialized.

Digital computing was based on Boolean logic.

We have been focused on logic for the past 50 years, but probabilities have really been the valuable part. The past 30 years have been about how to compute probabilities.

We had very simple computers, such as the slide rule, and the string computer—a lot of effort is put into educating people, so we can come up with answers quickly.

What kind of computer is the brain? It’s not a digital computer. It’s not a single computer. There are hundreds of problems solved by specialized circuits. Now, as we examine the brain, we’re deconstructing the circuits to see what’s going on. But in many cases we don’t have a clue.

You can see neurons as processors.

We have many learning algorithms. We know the brain has dozens of learning systems working together.

We have neural networks that are 20 layers deep, like the brain.

Most algorithms don’t scale, and you need scaling to create machines that think like the brain.

Deep learning is bringing major advances. Elements of the neural networks are based on the cell behavior of the brain. The parts of the network have analogs in the biological system.

One aspect isn’t captured by these neural networks. You need recurrent connections—feedback loops.

Now we’re marrying deep learning with recurrent neural networks. Combining deep learning with memory.
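The difference a feedback loop makes can be seen with a single recurrent unit. In the toy sketch below (scalar weights chosen arbitrarily, as an assumption), the unit's new state depends on both the current input and its own previous state: h_t = tanh(w_x * x_t + w_h * h_(t-1)). With the feedback weight w_h set to zero it behaves like a feed-forward unit and forgets an input pulse immediately; with feedback, the pulse echoes through later steps, which is the short-term memory that recurrent connections add.

```python
import math


def run_recurrent_unit(inputs, w_x=1.0, w_h=0.9):
    """Return the hidden state after each input for one scalar recurrent unit."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h)  # the w_h * h term is the feedback loop
        states.append(h)
    return states
```

Feeding the pulse `[1.0, 0.0, 0.0, 0.0]` with `w_h=0.0` gives states of exactly zero after the first step, while `w_h=0.9` keeps a decaying trace of the pulse (about 0.60, 0.49 and 0.41). Real recurrent networks use weight matrices and gated units such as LSTMs so they can hold information longer and choose what to keep.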

Back to the bee. It’s a champion learner. If you give a bee a reward, sucrose, and associate it with the color of a flower, it will go back to that flower and will even tell other bees about it.

We have neurons that help us make decisions, dopamine pathways.

Gerald Tesauro of IBM Research produced TD-Gammon, a machine that could play backgammon. It was a learning machine, even more important than Deep Blue, the chess-playing computer, which used brute force to win at chess.
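TD-Gammon learned by temporal-difference (TD) learning: after each move it nudged its estimate of the previous position's value toward the estimate for the position that followed, so the final win or loss gradually propagates back to earlier positions. TD-Gammon combined TD(λ) with a neural network and self-play; the sketch below keeps only the core tabular TD(0) update, on an assumed three-state chain with a reward of 1 at the end and a discount factor of 0.9, so the correct values are easy to check (0.81, 0.9 and 1.0 from the first state to the last).

```python
def td0_chain(num_states=3, episodes=1000, alpha=0.1, gamma=0.9):
    """Tabular TD(0) on a deterministic chain 0 -> 1 -> ... -> terminal.

    Every step gives reward 0 except the last, which gives reward 1.
    Returns the learned value estimate for each non-terminal state.
    """
    values = [0.0] * num_states
    for _ in range(episodes):
        for s in range(num_states):
            last = s == num_states - 1
            reward = 1.0 if last else 0.0
            next_value = 0.0 if last else values[s + 1]  # the terminal state is worth 0
            # TD error: how much better or worse things went than predicted.
            td_error = reward + gamma * next_value - values[s]
            values[s] += alpha * td_error  # move the estimate toward the bootstrapped target
    return values
```

No state is ever told its true value directly; each learns only from its successor's current estimate, and the reward at the end of the chain seeps backward episode by episode. The `td_error` term is the reward prediction error that links this kind of learning to the dopamine pathways Sejnowski mentions.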

Another major advance is neuromorphic engineering. Much more efficient computing is possible: cheaper, and using less power.

Everything you see is a pattern of spikes in your brain.

We will be able to create visual systems that are at least as good as ours. We’re not trying to duplicate the brain, but using the brain as inspiration for what we do.

The US has The BRAIN Initiative; the EU has the Human Brain Project. China hasn’t yet announced their project. They planned on focusing on human brain diseases. Then the military got involved. They smelled a technology that would be important for the future of China, and they were willing to put in a lot more money—about $100 billion. It’s a grand challenge. Could have a huge impact on the country, and the world.

Looking back at history, we’re going through a major transition. In the 20th century physics breakthroughs took decades to come into products. In the second half of the 20th century, DNA transformed biology. Precision medicine will transform healthcare. It takes a long time to go from scientific advance to affecting everybody.

Now I’ll make a prediction. It’s clear to me that everything we do depends on computers, including neuroscience. But we also have created nanoscience, allowing us to build smaller devices. And nature was here long before us. That’s the secret of the brain: how do you handle huge amounts of information? All of these mysteries will be worked out in the next few decades. They all have information in common. DNA is information. The brain is the organ for handling information. How do you organize, access and apply it? These are the big challenges we have. We’ll be able to achieve these advances only through collaboration with machines.

My prediction: The future is going to be even bigger data.

————

12:00 PM ET

The Future of Cognitive Computing

Dr. John Kelly, Senior Vice President, Solutions Portfolio & Research, IBM

Here’s a way to think about this. It’s very easy, as we get into cognitive computing and AI, to rapidly talk about deep learning and algorithms. We’ll talk a lot about that today. But we must keep in mind: toward what end? To me it’s all about the outcomes—changing the world and getting insights that impact business and society.

Last night at the Churchill Club cognitive computing event, somebody asked what Watson is worth. I can’t put a number on it. But what’s the cost of not knowing—and not being able to cure cancer or address the world’s big problems? In healthcare alone, there’s a trillion-dollar cost of not knowing.

It’s tempting to talk about what we’re doing as replicating the human brain. That’s not what we’re about. We’re not trying to mimic the human brain. We’re building systems that are capable of handling all of the data that’s becoming available today. Much of that information is dark. We saw that we need an entirely new computing system to deal with that.

It’s like dark matter in the universe. We can’t observe it but we see the effects of it. This is the equivalent in the data space. Think of the solutions that are in that data if we can get to it.

We’re inspired by what the human mind can do, but that’s not the objective.

Think about the industries that could be affected by this. Oil and gas. A huge opportunity. The industry spends billions for a rig. Too often they miss the reservoir, so they don’t drill and extract it optimally. Retail. Tweets and Facebook posts provide incredible insights about consumers for retailers. The Internet of Things. It’s one of the great next frontiers. Images. Machine-to-machine data—which is noisy and unstructured. It’s perfect for cognitive computing. Cities, where you’re dealing with safety and traffic. Security. It’s no longer about building firewalls. It’s about understanding people and their behavior. Healthcare. It’s one of the biggest. An enormous industry ripe for not just digital disruption but cognitive disruption, as we bring in new data and insights. Transportation. Self-driving vehicles will have to be cognitive so they can make instant, smart decisions.

Historically, we’re at an incredible inflection point in the history of computing. The first era was the tabulating era, when we automated basic tasks such as arithmetic. The second era was the programmable era. We put the instructions into the computer and let it run. But now there’s no way we can program and keep up with the exponential growth of data, and interact with data in real time.

Now, in the cognitive era, we’re creating entirely new computer systems that do things entirely differently than during the programmable era.

Cognitive computing, the way we’re doing it at IBM, is a platform, just like the IBM System/360 was back in the 1960s. It’s a platform that others can build upon.

I have been amazed at what has happened since February 2011, when Watson competed on Jeopardy. This area has exploded in industry and academia.

Where do we need to go with this as humans?

The match captured people’s imaginations, but it also set up man vs. machine.

That’s not it. It’s not man vs. machine. All the studies show that man plus machine will do better than either can do on their own.

I don’t see systems having compassion, intuition, value judgements. That’s for the humans. The machines will help us penetrate complexity.

With man plus machine, we can take the best experts at something and make them even better. We can take the rest of the people and make them as good as anybody else in the world.

So the key is how do we get the synergy between man and machine.

Lots of people are doing this. Voice recognition. Image recognition. Natural language processing. But they’re single solutions. They’re like single tools. Only IBM is building the entire toolkit, like we did with the System/360, and turning it into a platform.

We took the original Watson and put it out on our cloud for others to use. Then we added other cognitive technologies. At our hackathons, young people are building meaningful applications on Watson in 12 hours, or in a day or two.

We could have sold a lot of Watson boxes. But we decided to make it cloud based, a platform, for transforming industries. It’s the platform for our ecosystem. We have hundreds of companies building on it; dozens of universities.

We have a pipeline of these Watson APIs, these services, that are coming. It just keeps getting richer.

It’s about learning at scale from data; it’s about reasoning over the data, with a purpose; and it’s about interacting with humans. The magic of man plus machine will create leapfrog capabilities over the coming years. It’s not about automating and programming systems. The possibilities with this are immense. With Watson, image analytics, machine learning—we can change the face of healthcare.

Thomas Watson Jr. said, “computing will never rob man of his initiative or replace the need for creative thinking. By freeing man from the more menial or repetitive forms of thinking, computers will actually increase the opportunities” for humans to use our human skills and capabilities.

With cognitive, it’s no longer about replacing the menial and repetitive tasks. It’s about augmenting human capability and solving problems that couldn’t be solved before.