The fabric of big memory

Technologies as diverse as Apache Spark, SAP HANA and NoSQL databases all share a common architectural feature – the use of in-memory data stores. Moving data sets from traditional storage media into memory can dramatically reduce system latency and increase application performance. These data sets are growing ever larger, however, leading to requirements for what might be described as “big memory.”

Unfortunately, dynamic random access memory (DRAM) can be hundreds of times more costly than hard disk drive storage and up to 10 times the cost of flash,[1] making the use of DRAM for very large data sets prohibitively expensive. DRAM-based solutions also still require persistent storage, and when a cache miss falls through to disk, the performance of these next-generation applications can plummet.
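To see why a small miss rate matters so much, consider the standard weighted-average model of access latency. The short Python sketch below is illustrative only: the function name and the latency figures (0.1 µs for DRAM, 5 ms for disk, 100 µs for flash) are assumptions chosen to show the shape of the effect, not measurements from any product.

```python
def effective_latency_us(hit_ratio: float, memory_us: float, backing_us: float) -> float:
    """Average access latency when a fraction of reads miss memory.

    hit_ratio  -- fraction of accesses served from memory (0.0 to 1.0)
    memory_us  -- latency of an in-memory access, in microseconds
    backing_us -- latency of an access that falls through to backing storage
    """
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * memory_us + (1.0 - hit_ratio) * backing_us


# Assumed, order-of-magnitude latencies for illustration only.
DRAM_US = 0.1      # in-memory access
DISK_US = 5000.0   # hard disk access (about 5 ms)
FLASH_US = 100.0   # flash access

if __name__ == "__main__":
    for hit_ratio in (0.999, 0.99, 0.95):
        disk = effective_latency_us(hit_ratio, DRAM_US, DISK_US)
        flash = effective_latency_us(hit_ratio, DRAM_US, FLASH_US)
        print(f"hit ratio {hit_ratio:.3f}: "
              f"disk-backed {disk:8.2f} us, flash-backed {flash:6.2f} us")
```

Under these assumed numbers, a 1 percent miss rate against disk raises the average access time to roughly 50 µs, about 500 times slower than pure DRAM, while the same miss rate against flash averages close to 1 µs.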

To address such challenges, some IT architects have turned to flash storage. Flash can serve as a lower-cost, persistent extension of existing DRAM while often providing better performance compared to hard disk-based storage. For these reasons, among others, big memory solutions built with flash storage have gained popularity.[2]

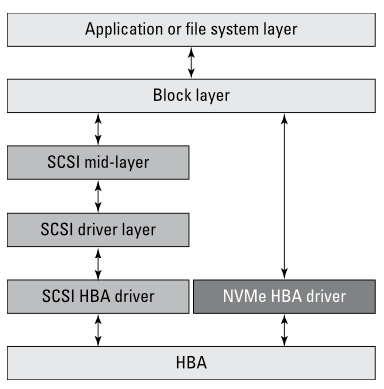

The question now becomes: “How do we build ultra-low latency big memory solutions that take advantage of the reliability and performance offered by flash?” As it happens, one key is the interface between the server farm and the flash storage. Data traffic between the CPU and storage traverses many components, each of which adds some latency: the processor-to-backplane path, the operating system (OS), the file system, the interface protocol, the network and the storage system itself. Various technologies are in play across each of these components, with different options affecting the total latency, cost and efficiency of the overall solution. One technology receiving plenty of attention for its ability to dramatically reduce storage interface latency is the Non-Volatile Memory Express over Fabrics (NVMe-oF) protocol.

NVMe-oF allows host hardware and software to more fully exploit the microsecond latency provided by all-flash arrays. It reduces I/O overhead between CPUs and storage, resulting in performance improvements compared to previous interfaces such as SCSI that were originally developed for use with far slower hard disk drives.[3]

NVMe is already used for internal PCIe-based SSDs. Driven by the need for much larger memory capacities and greater scalability to run even bigger analytics jobs using Spark, SAP HANA or cognitive solutions such as IBM PowerAI Vision, the IT industry has developed NVMe-oF to extend NVMe onto storage networks that use Fibre Channel, Ethernet or InfiniBand.

NVMe-oF can enable IT architects to connect external storage resources, such as all-flash arrays with hundreds of terabytes or even petabytes of capacity, to analytics engines over an ultra-low latency storage network. Essentially, NVMe-oF promises that the size of in-memory applications need no longer be bounded by the DRAM installed in a server, without compromising any of the reliability and data services provided by mature network protocols and enterprise-class storage systems.

These days, big memory is big business. For example, market research suggests that NoSQL sales will reach $3.4 billion in 2020 alone, driven by a 21 percent annual growth rate.[4] Scaling up web infrastructure on NoSQL databases has proven successful for Facebook, Digg and Twitter, among many others.[5] SAP recently announced that S/4HANA adoption doubled year over year in 2017 to more than 5,400 customers, with 1,300 signing up in the fourth quarter alone, including Nike and Ameco Beijing. Clearly, the market for large, high-performance data stores and big data analytics is substantial.

Big memory and the big business it enables will drive the adoption of highly scalable, latency-busting solutions such as NVMe-oF. Technologies originally designed to support millisecond-speed disk and overnight batch processing are giving way to microsecond-speed storage supporting real-time analytics of enormous data sets.

With NVMe-oF, you can have your cake, as big as you want, and eat it now. Stay tuned for what’s in store at IBM with NVMe and NVMe-oF.

[1] Content and image source: IBM Systems White Paper: Transforming real-time insight into reality, September 2017 TSW03555-USEN-00

[2] Content and image source: IBM Systems White Paper: Transforming real-time insight into reality, September 2017 TSW03555-USEN-00

[3] Image source: NVMe over Fibre Channel for Dummies, Wiley Brand Brocade special edition 2017 ISBN: 978-1-119-39970-4

[4] NoSQL Market Forecast 2015-2020, Market Research Media, January 2016 (https://www.marketresearchmedia.com/?p=568)

[5] IBM Systems White Paper: Transforming real-time insight into reality, September 2017 TSW03555-USEN-00