
Why is it no overstatement to call IBM Z / IBM LinuxONE the Mother of all platforms in Virtualization?

June 14, 2021 | Written by: Anbarasan Sekar

Categorized: IT infrastructure


Let’s begin with a little bit of history

IBM invented the “Hypervisor” in the 1960s for its mainframe computers. Anyone working in IT infrastructure, from Cloud Solution Architects to data center personnel, would understand and agree: “Cloud computing wouldn’t be possible without Virtualization, and Virtualization wouldn’t be possible without the Hypervisor.” So any Mainframer can be proud that the roots of ‘Cloud’ and ‘Virtualization’ were first laid on IBM Z, which, incidentally, is also the Mother of IBM LinuxONE.

But what are Hypervisors?

A Hypervisor is software or firmware that enables multiple operating systems (or operating system instances) to run alongside each other, sharing the same physical computing resources. Put another way, a Hypervisor creates and runs virtual machines (VMs). It separates VMs from each other logically, assigning each its own slice of the underlying computing power, memory, and storage, which prevents the VMs from interfering with each other.
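To make "assigning each VM its own slice" concrete, here is a minimal Python sketch. `ToyHypervisor` and `VM` are hypothetical names, and the model assumes static, dedicated slices of CPU and memory only; real hypervisors such as z/VM and KVM can also share and overcommit resources, which this toy deliberately ignores.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class VM:
    name: str
    cpus: int
    memory_gib: int


@dataclass
class ToyHypervisor:
    """Hypothetical model: hands out non-overlapping slices of a fixed host."""
    total_cpus: int
    total_memory_gib: int
    vms: List[VM] = field(default_factory=list)

    def free_cpus(self) -> int:
        return self.total_cpus - sum(vm.cpus for vm in self.vms)

    def free_memory_gib(self) -> int:
        return self.total_memory_gib - sum(vm.memory_gib for vm in self.vms)

    def create_vm(self, name: str, cpus: int, memory_gib: int) -> VM:
        # Reject any request that would eat into a slice already assigned
        # to another VM: this is what keeps the VMs from interfering.
        if cpus > self.free_cpus() or memory_gib > self.free_memory_gib():
            raise RuntimeError(f"not enough free resources for {name!r}")
        vm = VM(name, cpus, memory_gib)
        self.vms.append(vm)
        return vm


hv = ToyHypervisor(total_cpus=8, total_memory_gib=64)
hv.create_vm("web", cpus=2, memory_gib=16)
hv.create_vm("db", cpus=4, memory_gib=32)
print(hv.free_cpus(), hv.free_memory_gib())  # 2 16
```

At this point, asking for `hv.create_vm("batch", cpus=4, memory_gib=8)` raises `RuntimeError`, because only 2 CPUs remain unassigned.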

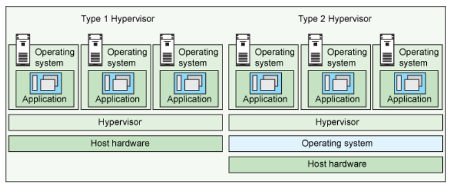

Just as Hypervisors can be defined in different ways, they can also be categorized in different ways. One categorization classifies firmware-based (microcode-based) hypervisors, such as the IBM Z & IBM LinuxONE Processor Resource / System Manager (PR/SM) and the IBM Power Hypervisor (pHyp), as Type-1, and all software-based hypervisors, such as IBM z/VM, KVM, VMware and Microsoft Hyper-V, as Type-2. Another categorization classifies all native or bare-metal hypervisors as Type-1, whether they are firmware- or software-based, so IBM z/VM, Microsoft Hyper-V and VMware ESXi would all be Type-1 under this scheme. Hosted hypervisors that run on a conventional operating system, such as Oracle VirtualBox and Parallels Desktop for Mac, are Type-2.
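The two schemes can be expressed as two different functions over the same facts. In this hedged Python sketch, the `Impl` labels and function names are hypothetical; each hypervisor's implementation style is taken from the paragraph above. KVM is left out because the text only places it under the first scheme.

```python
from enum import Enum


class Impl(Enum):
    FIRMWARE = "firmware / microcode"
    NATIVE_SOFTWARE = "software, runs on bare metal"
    HOSTED_SOFTWARE = "software, runs on a conventional OS"


# Implementation style of each hypervisor, as described in the text.
HYPERVISORS = {
    "PR/SM": Impl.FIRMWARE,
    "IBM Power Hypervisor (pHyp)": Impl.FIRMWARE,
    "IBM z/VM": Impl.NATIVE_SOFTWARE,
    "VMware ESXi": Impl.NATIVE_SOFTWARE,
    "Microsoft Hyper-V": Impl.NATIVE_SOFTWARE,
    "Oracle VirtualBox": Impl.HOSTED_SOFTWARE,
    "Parallels Desktop for Mac": Impl.HOSTED_SOFTWARE,
}


def type_by_implementation(impl: Impl) -> int:
    """First scheme: firmware-based is Type-1, every software-based one is Type-2."""
    return 1 if impl is Impl.FIRMWARE else 2


def type_by_hosting(impl: Impl) -> int:
    """Second scheme: native/bare-metal (firmware or software) is Type-1, hosted is Type-2."""
    return 2 if impl is Impl.HOSTED_SOFTWARE else 1


for name, impl in HYPERVISORS.items():
    print(f"{name:30} first scheme: Type-{type_by_implementation(impl)}"
          f"   second scheme: Type-{type_by_hosting(impl)}")
```

z/VM is the telling row: Type-2 under the first scheme but Type-1 under the second. The label alone therefore says little about how a hypervisor actually works.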

One categorization of hypervisors is depicted in the figure below:

When choosing your Hypervisor, rather than focusing on these categorizations, it is better to understand the server virtualization approaches and the implementation methods of the candidate hypervisors, and then make your decision.

Why Virtualize in the first place?

Virtualization involves a shift in thinking from physical to logical: IT resources are treated as logical resources rather than as separate physical ones. With virtualization, you can consolidate resources such as processors, storage, and networks into a virtual environment that provides the following benefits:

- Consolidation to reduce hardware cost.

- Optimization of workloads.

- IT flexibility and responsiveness.

Virtualization is the creation of flexible substitutes for actual resources — substitutes that have the same functions and external interfaces as their actual counterparts but that differ in attributes such as size, performance, and cost. These substitutes are called virtual resources; their users are typically unaware of the substitution.

Did Virtualization and VMs solve all problems?

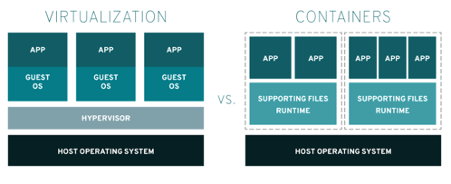

In traditional virtualization, as we’ve seen, a hypervisor virtualizes the physical hardware. Each VM then contains a guest OS, a virtual copy of the hardware that the OS requires to run, and an application with its associated libraries and dependencies. As a result, VMs typically run to gigabytes in size, and their dependencies on the OS, application and libraries make them less portable.

This paved the way for containers: small, fast, and portable alternatives to VMs that do not need to include a guest OS in every instance and can instead simply leverage the features and resources of the host OS. Containers rely on a form of operating system (OS) virtualization in which OS features are used both to isolate processes and to control the amount of CPU, memory, and disk those processes can access. Containers first appeared long before the current wave, in forms such as FreeBSD Jails and AIX Workload Partitions (WPARs), but most developers consider the introduction of Docker in 2013 to be the start of the modern container era.
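On Linux, the "control the amount of CPU, memory, and disk" part is handled by control groups (cgroups), while namespaces provide the process isolation. The hedged sketch below uses a hypothetical helper, `cgroup_v2_limits`, that only computes the values a runtime would write into the cgroup v2 interface files `cpu.max`, `memory.max` and `io.max`. The block device number `8:0` is an assumption for illustration, and in practice a container runtime such as Docker writes these files for you.

```python
from typing import Dict, Optional


def cgroup_v2_limits(cpu_fraction: float, memory_bytes: int,
                     device: Optional[str] = None,
                     read_bps: Optional[int] = None,
                     period_us: int = 100_000) -> Dict[str, str]:
    """Compute cgroup v2 interface-file contents for a container's limits.

    Only computes the strings; applying them means writing each value into
    the matching file of a cgroup directory under /sys/fs/cgroup (as root).
    """
    if cpu_fraction <= 0 or memory_bytes <= 0:
        raise ValueError("limits must be positive")
    # cpu.max is "<quota> <period>" in microseconds: at most `quota` us of
    # CPU time per `period` us, so 1.5 means one and a half CPUs.
    quota_us = round(cpu_fraction * period_us)
    files = {
        "cpu.max": f"{quota_us} {period_us}",
        # memory.max is a hard limit in bytes.
        "memory.max": str(memory_bytes),
    }
    if device is not None and read_bps is not None:
        # io.max entries are keyed by the block device's "MAJ:MIN" numbers.
        files["io.max"] = f"{device} rbps={read_bps}"
    return files


limits = cgroup_v2_limits(1.5, 512 * 1024**2, device="8:0", read_bps=10 * 1024**2)
for name, value in limits.items():
    print(f"{name}: {value}")
```

This prints `cpu.max: 150000 100000`, `memory.max: 536870912` and `io.max: 8:0 rbps=10485760`: one and a half CPUs, 512 MiB of memory, and 10 MiB/s of reads from that device.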

The figure below shows the difference in packaging between VMs & Containers:

The Containerization paradigm

Software needs to be designed and packaged differently in order to take advantage of containers—a process commonly referred to as containerization. When containerizing an application, the process includes packaging an application with its relevant environment variables, configuration files, libraries, and software dependencies. The result is a container image that can then be run on a container platform.
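The packaging step described above is usually written down as a Dockerfile. The sketch below is a hedged illustration: `ImageSpec` and `render_dockerfile` are hypothetical names, and the base image `python:3.11-slim`, `config.yaml`, `app.py` and the `flask` dependency are assumptions. It maps each ingredient the paragraph lists (environment variables, configuration files, libraries and dependencies) to a standard Dockerfile instruction.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ImageSpec:
    """Hypothetical description of what goes into a container image."""
    base_image: str
    env: Dict[str, str] = field(default_factory=dict)           # values assumed to have no spaces
    config_files: Dict[str, str] = field(default_factory=dict)  # source -> path in image
    dependencies: List[str] = field(default_factory=list)       # Python packages
    command: List[str] = field(default_factory=list)


def render_dockerfile(spec: ImageSpec) -> str:
    lines = [f"FROM {spec.base_image}", "WORKDIR /app"]
    for key, value in spec.env.items():
        lines.append(f"ENV {key}={value}")           # environment variables
    for source, target in spec.config_files.items():
        lines.append(f"COPY {source} {target}")      # configuration files
    if spec.dependencies:
        # Libraries and software dependencies are installed into the image.
        lines.append("RUN pip install --no-cache-dir " + " ".join(spec.dependencies))
    lines.append("COPY . .")                         # the application itself
    if spec.command:
        # Exec form: a JSON array, so the process starts without a shell.
        lines.append("CMD " + json.dumps(spec.command))
    return "\n".join(lines) + "\n"


spec = ImageSpec(
    base_image="python:3.11-slim",
    env={"APP_ENV": "production"},
    config_files={"config.yaml": "/etc/app/config.yaml"},
    dependencies=["flask"],
    command=["python", "app.py"],
)
print(render_dockerfile(spec), end="")
```

Saved as `Dockerfile` next to `app.py` and `config.yaml`, the output could be built into an image with `docker build -t myapp .` (the tag `myapp` is also hypothetical).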

As companies began embracing containers, the simplicity of the individual container was overshadowed by the complexity of managing hundreds (even thousands) of containers across a distributed system. To address this challenge, container orchestration emerged as a way of managing large volumes of containers throughout their lifecycle, including:

- Provisioning

- Monitoring

- Resource allocation and redundancy

- Scaling and load balancing

- Moving between physical hosts

While many container orchestration platforms were created to help address these challenges, Kubernetes, an open source project introduced by Google in 2014, quickly became the most popular container orchestration platform, and it is the one the majority of the industry has standardized on.
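Kubernetes manages this lifecycle declaratively: you state the desired state, and controllers repeatedly compare it with the observed state and act on the difference. The toy below shows only that reconciliation idea. `reconcile` and `apply` are hypothetical functions that work on replica counts, and no Kubernetes API is involved.

```python
from typing import Dict


def reconcile(desired: Dict[str, int], running: Dict[str, int]) -> Dict[str, int]:
    """Return, per app, how many replicas to start (+) or stop (-)."""
    deltas = {}
    for app in sorted(set(desired) | set(running)):
        delta = desired.get(app, 0) - running.get(app, 0)
        if delta != 0:
            deltas[app] = delta
    return deltas


def apply(deltas: Dict[str, int], running: Dict[str, int]) -> None:
    """Pretend to start/stop containers by adjusting the running counts."""
    for app, delta in deltas.items():
        running[app] = running.get(app, 0) + delta
        if running[app] == 0:
            del running[app]


desired = {"web": 3, "db": 1}                 # declared state
running = {"web": 1, "cache": 2}              # observed state

print(reconcile(desired, running))            # {'cache': -2, 'db': 1, 'web': 2}
apply(reconcile(desired, running), running)
print(reconcile(desired, running))            # {} (converged)

running["web"] -= 1                           # simulate a failed container
print(reconcile(desired, running))            # {'web': 1}
```

The same loop covers provisioning, recovery from failures and scaling: changing `desired["web"]` to 5 is enough for the next pass to start two more replicas.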

Red Hat OpenShift is a downstream, enterprise distribution of Kubernetes: it has undergone additional testing and includes features that are not available in the upstream Kubernetes open source project. Red Hat OpenShift Container Platform builds on this with enterprise-ready scaling and security.

From physical partitions to VMs, to Docker containers, to Kubernetes orchestration, to Red Hat OpenShift Container Platform: is there one platform that supports ALL of them, and supports them ALL in parallel?

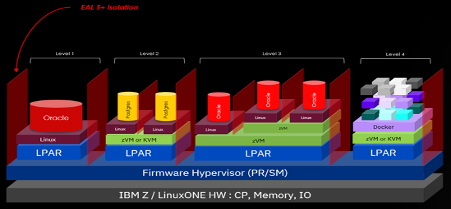

Thanks to Virtualization on IBM Z & IBM LinuxONE, not only are all of these supported, they are also augmented with enterprise-grade security, reliability, availability, serviceability and scalability.

Virtualization on IBM Z & IBM LinuxONE:

Level 1 Virtualization: Shows an LPAR running Linux natively

Level 2 Virtualization: Shows VMs running on z/VM or KVM Hypervisor

Level 3 Virtualization: Shows nesting of z/VM Virtual Machines

Level 4 Virtualization: Shows Linux containers that can either run as stand-alone containers or can be orchestrated / managed by a Kubernetes instance or Red Hat OpenShift Container Platform
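Because these levels stack on top of each other, a small tree is a convenient way to see which layer runs on which. In the hedged sketch below, the `Layer` class, the `path_to` helper and every LPAR, guest and container name are hypothetical. The level comments loosely follow the four levels listed above, and the figure remains the authoritative picture.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Layer:
    name: str
    children: List["Layer"] = field(default_factory=list)


def path_to(node: Layer, target: str) -> List[str]:
    """Depth-first search for `target`; returns the stack of layers above it."""
    if node.name == target:
        return [node.name]
    for child in node.children:
        below = path_to(child, target)
        if below:
            return [node.name] + below
    return []


# Hypothetical layout of one machine, loosely following Levels 1-4 above.
machine = Layer("IBM Z / LinuxONE (PR/SM)", [
    Layer("LPAR A: Linux"),                                   # Level 1
    Layer("LPAR B: z/VM", [
        Layer("Linux guest B1"),                              # Level 2
        Layer("z/VM guest B2", [Layer("Linux guest B2a")]),   # Level 3: nested z/VM
    ]),
    Layer("LPAR C: KVM", [
        Layer("Linux guest C1", [                             # Level 2
            Layer("container C1a"),                           # Level 4
            Layer("container C1b"),
        ]),
    ]),
])

print(" -> ".join(path_to(machine, "container C1a")))
```

The printed path, `IBM Z / LinuxONE (PR/SM) -> LPAR C: KVM -> Linux guest C1 -> container C1a`, lists every layer of isolation between that container and the hardware.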

A quick summary of other major features of this “Mother of all virtualization platforms”

- The Evaluation Assurance Level 5+ (EAL 5+) isolation highlighted at the top left of the picture above indicates that workloads in PR/SM LPARs on an IBM Z or IBM LinuxONE system enjoy the same level of isolation as workloads running on separate physical servers. This provides a highly secure environment for the workloads in every LPAR.

- IBM Z or IBM LinuxONE can run web servers, application servers, many different databases, and both open-source and commercial software, all within the same server.

- Highest-rated security among all commercial Linux servers, with FIPS 140-2 Level 4 certified cryptographic hardware.

- Highest reliability / availability ratings among all commercially available servers.

- Reduced software license costs and lower TCO, because fewer cores are required.

