
Give AI Fairness a Fair Chance

October 5, 2018 | Written by: SAMEEP MEHTA

Categorized: Digital Reinvention


Last week, I had an opportunity to talk about AI and fairness to 100+ business and technical leaders at the IBM THINK event in Mumbai. Today IBM announced the launch of Trust and Transparency Cloud Services and open-sourced the AI Fairness 360 toolkit (github link), so I thought this is a good time to share what I talked about at THINK.

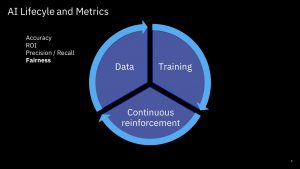

Let’s look at the AI lifecycle at a very high level. It primarily consists of three phases. The first is the data phase, which includes activities like acquisition, cleaning, sampling, feature extraction and other operations that make the data ready for the next step. The next step is training. This is where the magic happens: intricate patterns are discovered, decision boundaries are learnt, and the data is transformed into actionable business insights and recommendations. Finally, an AI system is always evolving: it interacts with its environment, learns from usage, and ingests more data and feedback to continuously improve. We will come back to this towards the end of the talk.

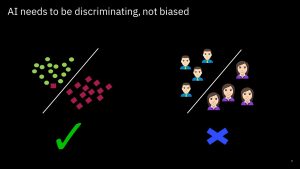

Let me add a mildly provocative and counterintuitive statement: “DISCRIMINATION is NECESSARY”. To put it in context, let’s move to the next slide.

Every successful AI algorithm learns and exploits discrimination in the data to learn decision boundaries: to tell bad loans from good loans, or malignant tumors from benign ones. This discrimination is a desirable property of AI algorithms, except when it results in biased or unfair decisions. For example, consider the scenario on the left, where relevant features are used to learn the correct decision boundary, whereas the scenario on the right is clearly wrong because the decision boundary is based on a protected attribute like gender. Once such a model is put to use, it will generate unfair recommendations. Many regulations (like equal credit opportunity laws and employment laws) prohibit decisions on the basis of age, gender, ethnicity, etc. Hence it is important to catch such biases before AI is put to work.
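The two scenarios on the slide can be made concrete with a small sketch. The two models below are hypothetical toy rules (the names `model_by_features` and `model_by_gender`, and the `income` and `debt_ratio` fields, are made up for illustration). The check is a simple counterfactual “flip test”: change only the protected attribute and see whether the decision changes. This is one basic way to surface a boundary like the one on the right; it is not presented as the method our team used.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical applicant record: field names are illustrative only.
Applicant = Dict[str, object]


def model_by_features(a: Applicant) -> bool:
    """Toy 'left' model: the boundary uses only non-protected features."""
    return a["income"] >= 50_000 and a["debt_ratio"] <= 0.4


def model_by_gender(a: Applicant) -> bool:
    """Toy 'right' model: the boundary depends on the protected attribute."""
    return a["gender"] == "male" and a["income"] >= 30_000


def flip_test(
    model: Callable[[Applicant], bool],
    applicants: List[Applicant],
    attr: str = "gender",
    values: Tuple[str, str] = ("male", "female"),
) -> List[Applicant]:
    """Return the applicants whose decision changes when only `attr` is flipped."""
    flagged = []
    for a in applicants:
        flipped = dict(a)  # copy, so the original record is untouched
        flipped[attr] = values[1] if a[attr] == values[0] else values[0]
        if model(a) != model(flipped):
            flagged.append(a)
    return flagged


applicants = [
    {"gender": "male", "income": 60_000, "debt_ratio": 0.3},
    {"gender": "female", "income": 60_000, "debt_ratio": 0.3},
    {"gender": "female", "income": 35_000, "debt_ratio": 0.2},
]

print(len(flip_test(model_by_features, applicants)))  # 0: decisions ignore gender
print(len(flip_test(model_by_gender, applicants)))    # 3: every decision flips with gender
```

A flip test only catches direct use of the attribute; a model can still discriminate through proxies (for example, features correlated with gender), which is why group-level measurements are also needed.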

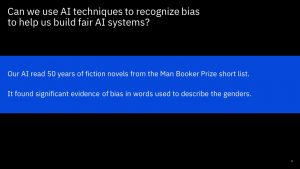

Now, it is not sufficient to have an intuition about the presence of bias. We need computational methods to detect and quantify biases. Unless we can measure it, we cannot improve it. The big question is: how can technology help detect and remove such biases? Can AI be self-correcting? In other words, can we use AI to build fair and unbiased AI?
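As a minimal illustration of what “quantify” can mean, here is a sketch of two common group-fairness metrics, statistical parity difference and disparate impact, computed on made-up outcomes. AI Fairness 360 reports metrics of this kind, but this standalone code does not use its API, and the data and group labels are hypothetical.

```python
from typing import Hashable, Sequence


def selection_rate(outcomes: Sequence[int], groups: Sequence[Hashable], group: Hashable) -> float:
    """Fraction of members of `group` that received the favorable outcome (1)."""
    picked = [o for o, g in zip(outcomes, groups) if g == group]
    if not picked:
        raise ValueError(f"no records for group {group!r}")
    return sum(picked) / len(picked)


def statistical_parity_difference(outcomes, groups, unprivileged, privileged) -> float:
    """Unprivileged selection rate minus privileged selection rate (0 means parity)."""
    return selection_rate(outcomes, groups, unprivileged) - selection_rate(outcomes, groups, privileged)


def disparate_impact(outcomes, groups, unprivileged, privileged) -> float:
    """Ratio of the two selection rates (1.0 means parity)."""
    return selection_rate(outcomes, groups, unprivileged) / selection_rate(outcomes, groups, privileged)


# Hypothetical loan decisions: 1 = approved, 0 = rejected.
outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["male"] * 4 + ["female"] * 4

print(statistical_parity_difference(outcomes, groups, "female", "male"))  # -0.5
print(disparate_impact(outcomes, groups, "female", "male"))               # about 0.33
```

Here women are approved at a rate of 0.25 versus 0.75 for men, so the parity difference is -0.5 and the disparate impact is one third. Values near 0 and 1.0 respectively would indicate that both groups receive the favorable outcome at similar rates.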

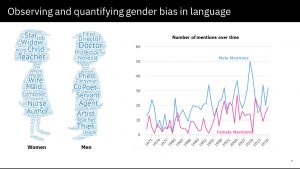

We decided to look at two common themes: movies and books. These were picked as exemplars to show how user-generated content can carry bias. We used Watson APIs and novel AI algorithms to discover bias in Bollywood movies as well as in Man Booker shortlisted books; both data sets span more than 50 years. The algorithms range from text mining, image understanding and video understanding to graph-based algorithms that characterize the importance of each character, how they are portrayed, etc. Let us look at some unsurprising but disappointing findings that were discovered computationally:

- Men are described with words like hardworking, brilliant, honest and clever, whereas women are often described as pretty, young and helpless.

- Even the importance of the characters they play is not equal. For example, male characters are referred to more than twice as often as female characters in summarized plots.

(For full details of our algorithms and results, please refer to our papers Bollywood_Analysis & Booker_Analysis.)
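The papers describe the actual pipeline. The snippet below is only a toy sketch of the underlying idea behind both findings: count how often each gender is mentioned, and which descriptors appear alongside those mentions. The word lists, the sentence-level co-occurrence rule and the sample text are all assumptions made for illustration, not the Watson-based algorithms we used.

```python
import re
from collections import Counter

# Hypothetical word lists; a real analysis would need far richer resources.
MALE = {"he", "him", "his", "man", "son", "father"}
FEMALE = {"she", "her", "hers", "woman", "daughter", "mother"}
DESCRIPTORS = {"hardworking", "brilliant", "honest", "clever", "pretty", "young", "helpless"}


def gender_profile(text: str):
    """Count gendered mentions and the descriptors that share a sentence with them."""
    mentions = Counter()
    descriptors = {"male": Counter(), "female": Counter()}
    for sentence in re.split(r"[.!?]+", text.lower()):
        tokens = re.findall(r"[a-z]+", sentence)
        present = set()
        for tok in tokens:
            if tok in MALE:
                mentions["male"] += 1
                present.add("male")
            elif tok in FEMALE:
                mentions["female"] += 1
                present.add("female")
        # Attribute every descriptor in the sentence to each gender mentioned there.
        for gender in present:
            descriptors[gender].update(t for t in tokens if t in DESCRIPTORS)
    return mentions, descriptors


plot = "He is brilliant and honest. His father was hardworking. She is young and helpless. He saved her."
mentions, descriptors = gender_profile(plot)
print(mentions["male"] / mentions["female"])  # 2.0
print(sorted(descriptors["male"]))            # ['brilliant', 'hardworking', 'honest']
print(sorted(descriptors["female"]))          # ['helpless', 'young']
```

Sentence-level co-occurrence is deliberately crude: a sentence mentioning both a man and a woman credits its descriptors to both, which is one reason real systems use parsing or dependency relations instead.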

Why is detecting such bias in data important for AI? Let us go back to our AI lifecycle.

An AI system is only as good as the data it is trained on. An AI model trained on this movies and books data is likely to associate hardworking and doctor with men, and helpless and nurse with women. Imagine putting such an AI to use to counsel students on career choices! Therefore, it is important to be aware of such biases and remove them before training. However, bias can also creep in during the training phase through feature learning, and during the continuous learning phase via new data or new interactions.
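One well-known way to reduce such bias before training is reweighing (Kamiran and Calders). Each (group, label) combination gets the weight P(group) * P(label) / P(group, label), so that in the weighted data the label becomes statistically independent of the protected attribute. The sketch below is a minimal standalone version on hypothetical data; the function names are made up, and it is not necessarily the technique used in our work.

```python
from collections import Counter
from typing import Hashable, List, Sequence


def reweighing_weights(groups: Sequence[Hashable], labels: Sequence[Hashable]) -> List[float]:
    """Per-record weights P(group) * P(label) / P(group, label)."""
    n = len(labels)
    g_count = Counter(groups)
    y_count = Counter(labels)
    gy_count = Counter(zip(groups, labels))
    weight = {
        (g, y): (g_count[g] / n) * (y_count[y] / n) / (c / n)
        for (g, y), c in gy_count.items()
    }
    return [weight[(g, y)] for g, y in zip(groups, labels)]


def weighted_positive_rate(groups, labels, weights, group, positive=1) -> float:
    """Share of the group's total weight carried by positive labels."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    pos = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == positive)
    return pos / total


# Hypothetical training data: label 1 = "recommended for the role".
groups = ["male"] * 4 + ["female"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

print(weights)  # under-represented combinations get 2.0, over-represented ones about 0.67
print(weighted_positive_rate(groups, labels, weights, "male"))
print(weighted_positive_rate(groups, labels, weights, "female"))
```

Before weighting, 75% of the men but only 25% of the women carry the positive label; after weighting, both groups sit at about 50%. The weights are then passed to any learner that accepts per-sample weights, leaving the records themselves unchanged.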

In the always-learning, ever-evolving AI world, fairness is not a one-time activity. We should consciously and continuously optimize for it.
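To make “continuously” concrete, here is a minimal monitoring sketch. It assumes decisions arrive in batches of (outcome, group) pairs, recomputes disparate impact for each batch, and flags batches below 0.8, a threshold borrowed from the “four-fifths” rule of thumb in US employment selection guidelines. The batch format, the threshold and the function names are assumptions, not a prescribed setup.

```python
from typing import Hashable, List, Optional, Sequence, Tuple

Decision = Tuple[int, Hashable]  # (outcome, group); 1 = favorable outcome


def disparate_impact(batch: Sequence[Decision], unprivileged, privileged) -> Optional[float]:
    """Selection-rate ratio for one batch, or None if it cannot be computed."""
    def rate(group):
        outcomes = [o for o, g in batch if g == group]
        return sum(outcomes) / len(outcomes) if outcomes else None

    u, p = rate(unprivileged), rate(privileged)
    if u is None or not p:  # a group is missing, or the privileged rate is 0
        return None
    return u / p


def monitor(batches: Sequence[Sequence[Decision]], unprivileged="female",
            privileged="male", threshold: float = 0.8) -> List[Tuple[int, float]]:
    """Return (batch index, disparate impact) for every batch below the threshold."""
    alerts = []
    for i, batch in enumerate(batches):
        di = disparate_impact(batch, unprivileged, privileged)
        if di is not None and di < threshold:
            alerts.append((i, di))
    return alerts


# Hypothetical production decisions arriving in two batches.
batches = [
    [(1, "male"), (1, "female"), (0, "male"), (0, "female")],  # equal rates
    [(1, "male"), (1, "male"), (1, "female"), (0, "female")],  # female rate drifts down
]
print(monitor(batches))  # [(1, 0.5)]
```

Checking every batch, rather than only the training set, is what lets drift introduced by new data or new interactions show up after deployment.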

As IBM has been advocating, in the next few years only fair and unbiased algorithms will be put to business use, both to meet regulatory requirements and to gain customer trust. It is important for business leaders to treat fairness as a first-class metric, just like other business metrics such as ROI. At the same time, technologists should build support for fairness into data and AI platforms, just like robustness, security and performance.

We, at IBM, are developing novel algorithms to detect and remove biases across all stages of AI systems.

———————————-

It was a great educational event for me. Where else would I hear inspirational talks from leaders like Harriet Green and Karan Bajwa, customer-centric technology from Sriram Raghavan and other industry leaders, and deep technical topics like lattice cryptography and quantum computing from Arvind Krishna and Praveen Jayachandran, all in less than 2.5 hours!

PS: I represented a large set of colleagues and 18 months of work, including Aleksandra Mojsilovic, Kush Varshney, Michael Hinds, Nishtha Madaan, Diptikalyan Saha and Manish Bhide. All errors in the slides and narrative are mine alone. This was meant to be a very high-level pitch on fairness. For a more detailed technical discussion, feel free to ping me at sameepmehta@in.ibm.com or sameepmehta3 on Twitter.
